How I Won The Women in TensorFlow Hackathon Sponsored By Google

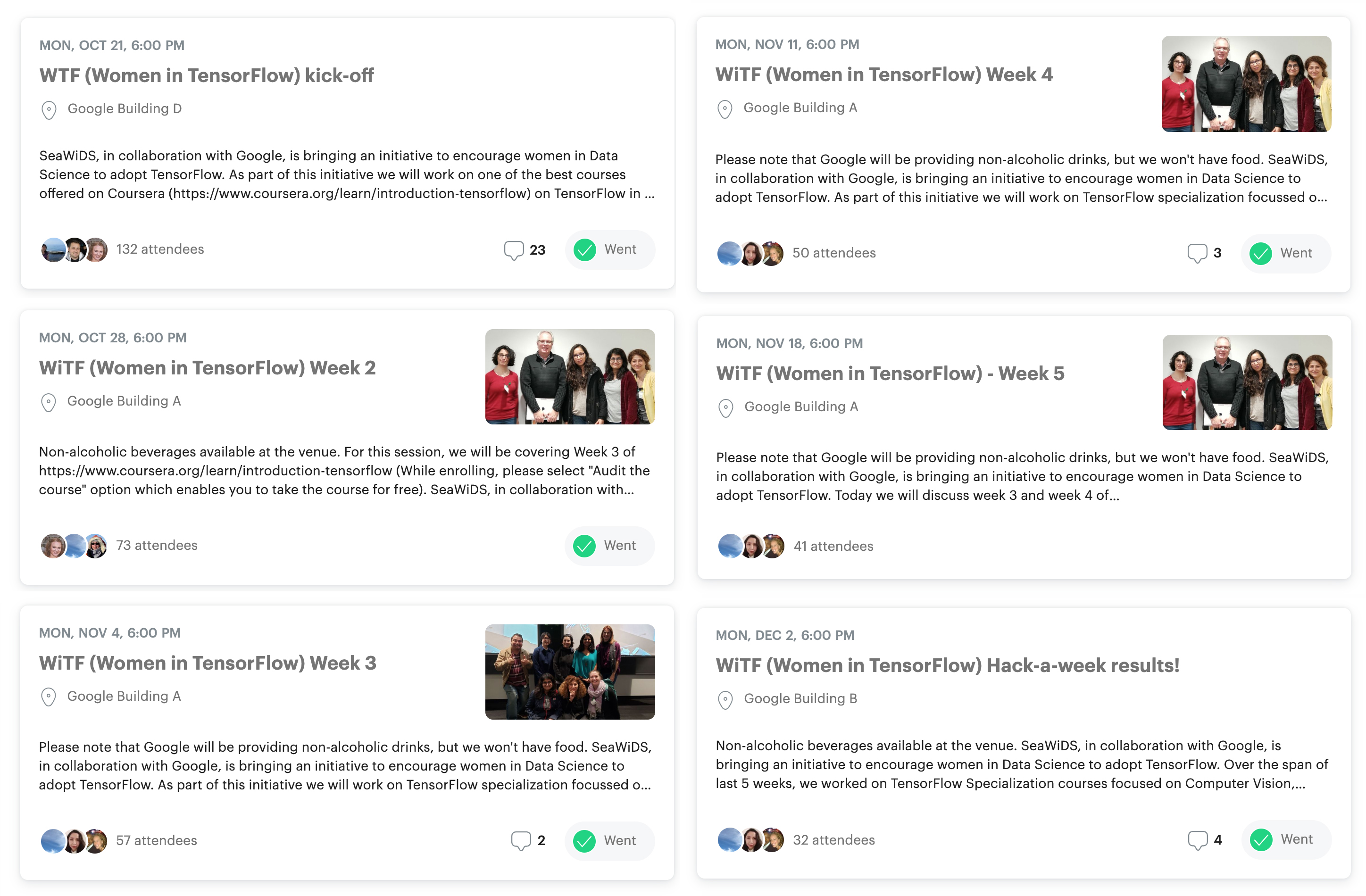

It all started when I came across a Meetup event organized by the Seattle Women In Data Science (SeaWIDS) community in collaboration with Google to encourage women in Data Science to adopt TensorFlow.

As someone who had been studying data science on my own for about a year, I couldn’t miss out on this learning opportunity.

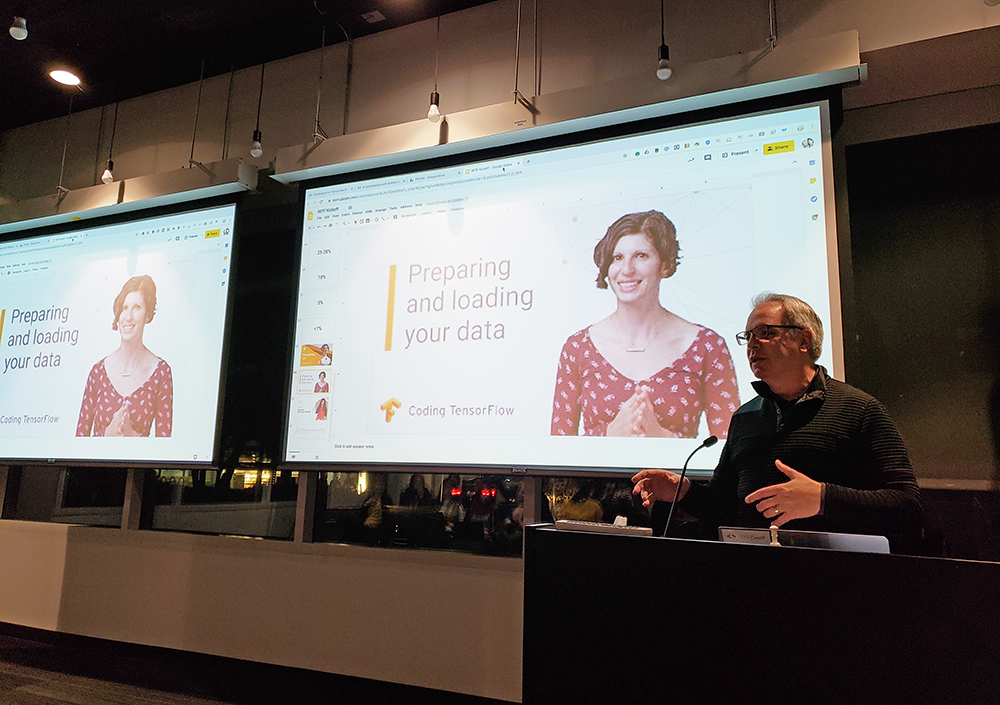

Hosted at the Google campus in Kirkland, WA, the event walked participants through the TensorFlow in Practice specialization on Coursera over six weeks, with the courses delivered by the course author himself, Laurence Moroney.

Upon completing the four courses, all participants would receive the Coursera certificate and have the opportunity to take part in a hackathon sponsored by Google, with a chance to win a Google Pixel 3a smartphone and Coral boards.

Deep learning was a bit overwhelming to dive into, but after reading Aurélien Géron’s book Hands-On Machine Learning with Scikit-Learn and TensorFlow, I started to put things into perspective and learned more about TensorFlow. Still, I knew I lacked practical experience with TensorFlow, and this course was exactly what I needed.

Since I had no prior experience with TensorFlow or with building deep neural networks (DNNs), participating in the hackathon didn’t even cross my mind. Little did I know that by the end of the course I would be able to build, train, and test a complete DNN.

After going through the course material, thanks to the impressive teaching skills of our instructor Laurence Moroney, and after a month of practice, building deep neural networks became easy for me. As the hackathon approached, I felt confident enough in my abilities to decide to take part in it!

Since the goal of the event was to promote women in Data Science, I wanted a project related to that cause, but one that also solved a real problem and added value.

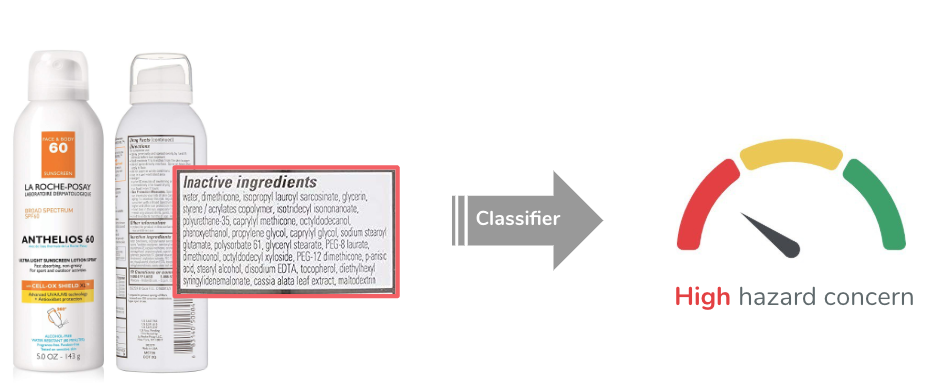

While brainstorming project ideas, I took time to reflect on actual problems I face. I use cosmetics and personal care products as part of my daily routine, and as someone with sensitive skin who is also allergic to chemical ingredients in cosmetic products, I was tired of memorizing and looking up the name of every ingredient each time I wanted to buy a product, just to know whether it was safe to use.

Digging deeper into the topic, I was surprised to learn how unregulated the cosmetics industry is in the USA: the FDA does not require cosmetic products and ingredients to be approved before they go on the market, as stated on its website:

The Federal Food, Drug, and Cosmetic Act does not require cosmetic products and ingredients to be approved by FDA before they go on the market, except for color additives that are not intended for use as coal tar hair dyes…

Also, according to the California Department of Public Health’s California Safe Cosmetics Program:

595 cosmetics manufacturers have reported using 88 chemicals that have been linked to cancer, birth defects or reproductive harm in more than 73,000 products.

This led to the idea of building a cosmetics classifier (an NLP deep neural network) that sorts products by hazard concern level based on the ingredients they contain:

- High hazard concern

- Moderate hazard concern

- Low hazard concern

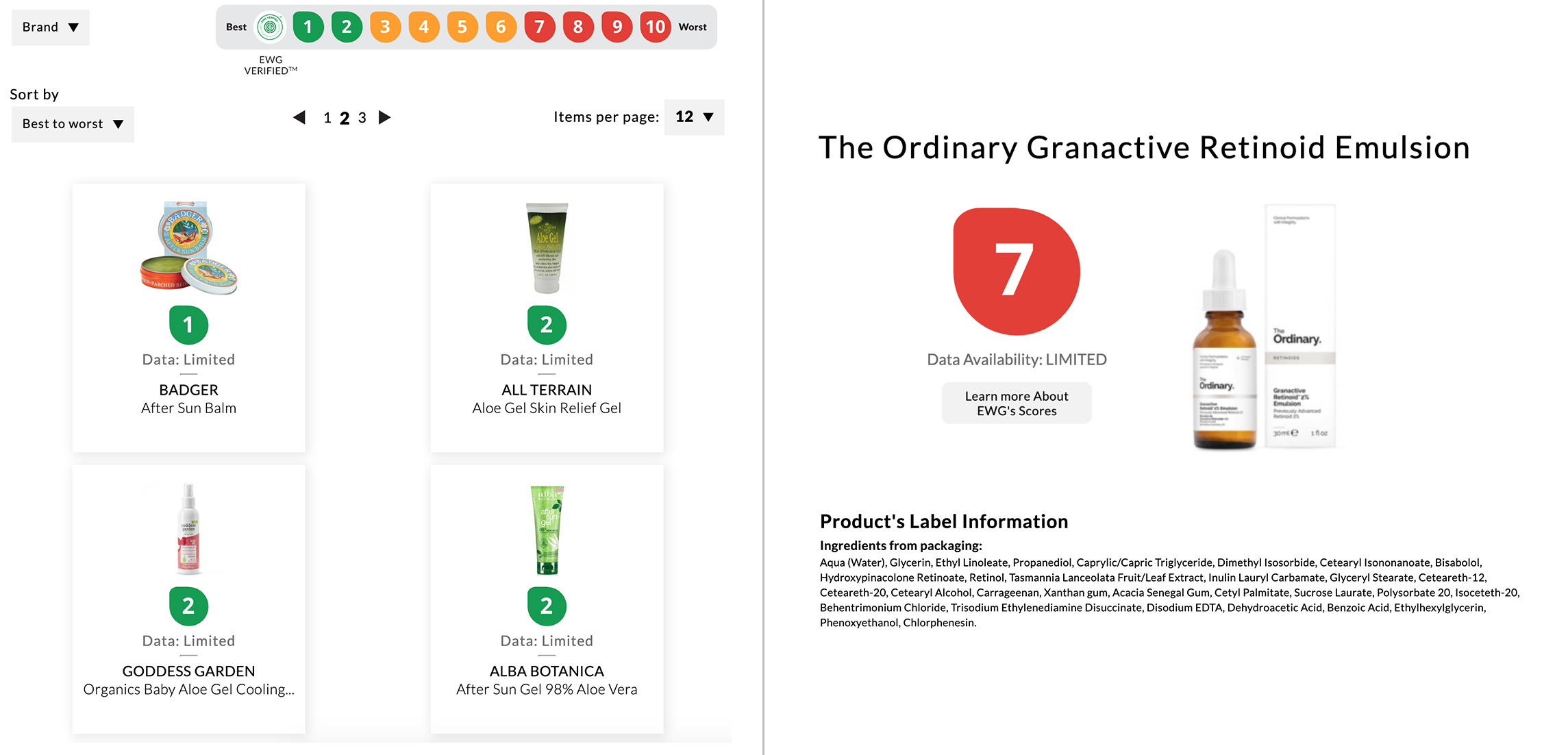

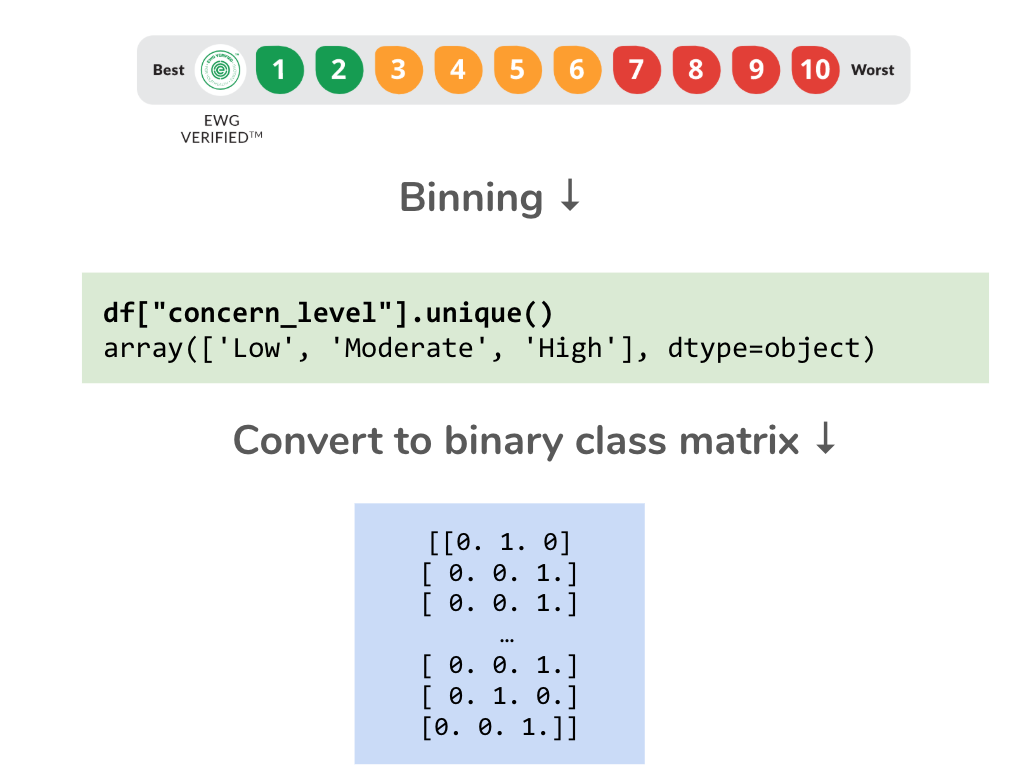

Luckily, labeled data was already available in the EWG Skin Deep® Cosmetics Database, a database of personal care and beauty products that indexes and scores products based on the hazards of their ingredients. To collect the data, I scraped around 5,000 products, and for each one I collected:

- Product name

- List of ingredients from packaging

- EWG-defined hazard concern score (from 1 to 10)

Once I had the data ready, I started exploring it.

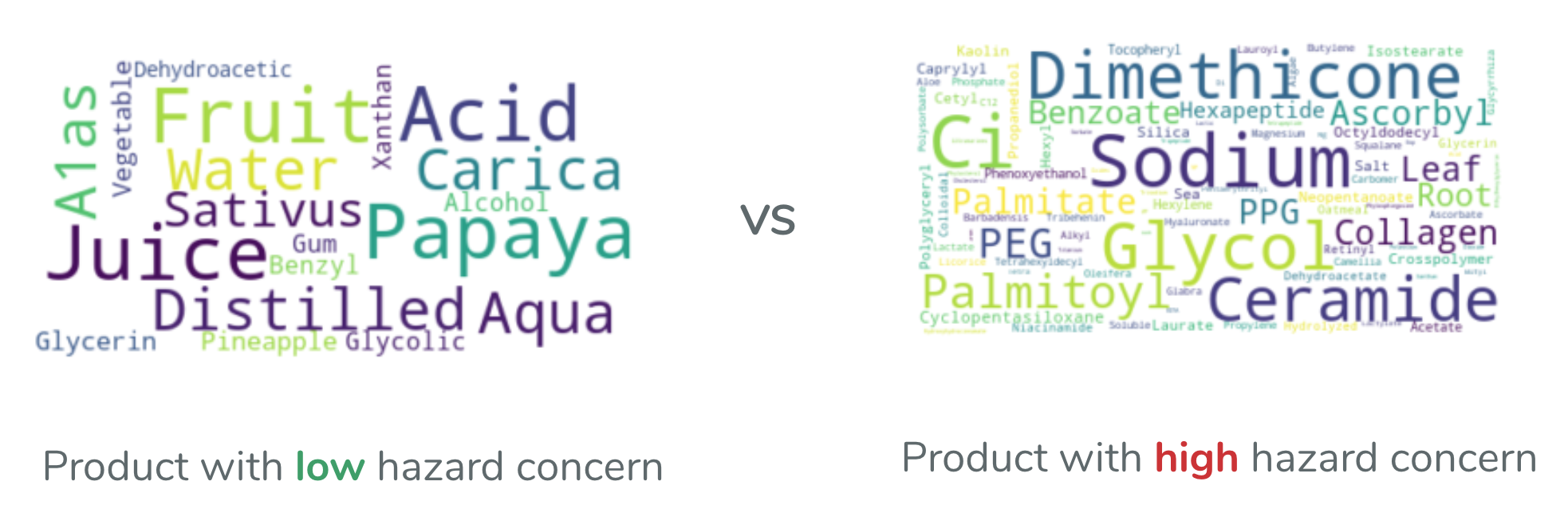

I plotted word clouds for a product with a low hazard score and a product with a high hazard score to compare the ingredients behind each score. In the example below, the figure on the left shows a low-hazard product containing mainly water, fruit juice and vegetable extracts, while the figure on the right shows a high-hazard product containing mostly synthetic ingredients, silicones (dimethicone) and petroleum-based compounds (PEG, PPG).
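A word cloud sizes each word by how often it appears, so the underlying step is just word counting. The sketch below shows that step with Python’s `collections.Counter`; it is not the code used for the figures, and the two ingredient lists are made-up examples rather than products from the scraped dataset. A plotting library (for example the `wordcloud` package’s `WordCloud.generate_from_frequencies`) could then draw counts like these.

```python
import re
from collections import Counter


def ingredient_word_counts(ingredients: str) -> Counter:
    """Count lowercase words in an ingredient list (letters only, so 'PEG-100' yields 'peg')."""
    words = re.findall(r"[a-z]+", ingredients.lower())
    return Counter(words)


# Hypothetical ingredient lists, for illustration only
low_hazard = "Water, Apple Fruit Juice, Aloe Leaf Juice, Cucumber Fruit Extract, Carrot Root Extract"
high_hazard = "Dimethicone, PEG-100 Stearate, PPG-15 Stearyl Ether, Fragrance, Dimethicone Crosspolymer"

print(ingredient_word_counts(low_hazard).most_common(3))
# [('fruit', 2), ('juice', 2), ('extract', 2)]
print(ingredient_word_counts(high_hazard).most_common(3))
# [('dimethicone', 2), ('peg', 1), ('stearate', 1)]
```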

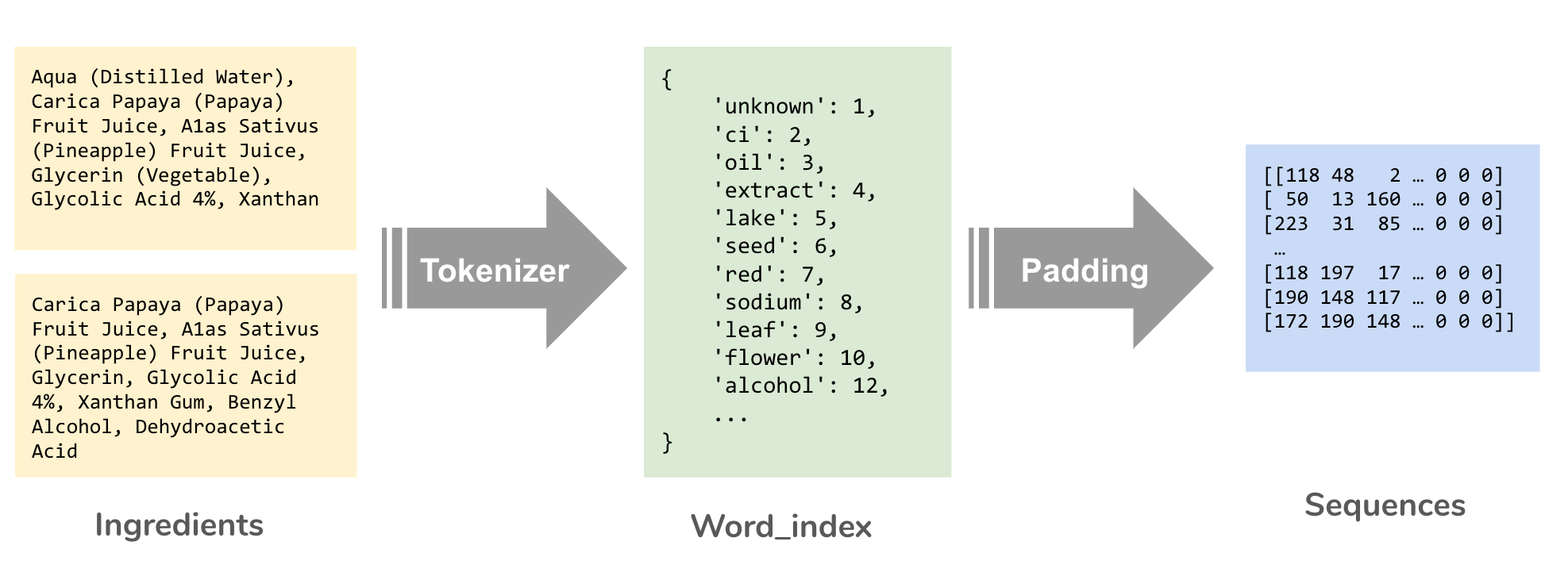

As for data preprocessing, I applied a tokenizer and padding to the ingredients dataset, turning each ingredient list into a fixed-length sequence of integer token IDs.
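To show what the tokenizer and padding steps do, here is a plain-Python sketch rather than the Keras utilities the project most likely used. It assumes word-level tokens, gives index 1 to the most frequent word (ties broken alphabetically here), reserves 0 for padding, and drops words that are not in the vocabulary. It truncates and pads at the end (`padding='post'`, `truncating='post'` in Keras terms), whereas Keras’s `pad_sequences` defaults to `'pre'` for both.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters/digits, dropping punctuation such as commas and hyphens
    return re.findall(r"[a-z0-9]+", text.lower())


def build_word_index(texts: list[str]) -> dict[str, int]:
    # Most frequent word gets index 1; index 0 is reserved for padding
    counts = Counter(word for text in texts for word in tokenize(text))
    ranked = sorted(counts, key=lambda w: (-counts[w], w))
    return {word: i + 1 for i, word in enumerate(ranked)}


def texts_to_padded(texts: list[str], word_index: dict[str, int], maxlen: int) -> list[list[int]]:
    padded = []
    for text in texts:
        ids = [word_index[w] for w in tokenize(text) if w in word_index]  # unknown words are dropped
        ids = ids[:maxlen]  # truncate long ingredient lists
        padded.append(ids + [0] * (maxlen - len(ids)))  # pad with 0s at the end
    return padded


texts = ["Water, Glycerin, Fragrance", "Water, Dimethicone"]
word_index = build_word_index(texts)
print(texts_to_padded(texts, word_index, maxlen=4))  # [[1, 4, 3, 0], [1, 2, 0, 0]]
```

With this indexing scheme, an `Embedding` layer’s `input_dim` has to be at least `len(word_index) + 1`, because index 0 is taken by padding.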

Then I binned the concern score into the three categories I defined and one-hot encoded them into a binary class matrix.
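The binning and one-hot encoding can be sketched as follows. The cut-offs are an assumption: they follow the ranges EWG shows on its site (1–2 low, 3–6 moderate, 7–10 high), but the post does not state which thresholds were used, and the class order (low, moderate, high) is also assumed. In Keras, `tf.keras.utils.to_categorical` builds the same matrix, as floats.

```python
def bin_score(score: int) -> int:
    """Map an EWG score (1-10) to a class index using assumed cut-offs."""
    if not 1 <= score <= 10:
        raise ValueError(f"score out of range: {score}")
    if score <= 2:
        return 0  # low hazard concern
    if score <= 6:
        return 1  # moderate hazard concern
    return 2  # high hazard concern


def one_hot(class_ids: list[int], num_classes: int = 3) -> list[list[int]]:
    """Binary class matrix: one row per product, with a single 1 in its class column."""
    return [[1 if c == k else 0 for k in range(num_classes)] for c in class_ids]


scores = [1, 4, 9, 2]  # hypothetical EWG scores
print(one_hot([bin_score(s) for s in scores]))
# [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 0, 0]]
```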

For the model, after multiple trials with different layers and parameters, I ended up with this DNN:

```python
import tensorflow as tf

model = tf.keras.Sequential([
    # Map each token ID to a 50-dimensional vector; input_dim is the vocabulary size
    tf.keras.layers.Embedding(input_dim=4609, output_dim=50),
    # Take the maximum of each embedding dimension over the whole ingredient sequence
    tf.keras.layers.GlobalMaxPooling1D(),
    tf.keras.layers.Dense(100, activation='relu'),
    # One output per hazard concern category; softmax turns them into probabilities
    tf.keras.layers.Dense(3, activation='softmax'),
])
```

After training the model, I tested it on ingredient lists it had never seen, and it correctly classified the products into the three categories.
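The model’s final `Dense(3, activation='softmax')` layer outputs one probability per category for each product, so turning a prediction into a category comes down to picking the largest probability. A minimal sketch, with made-up probabilities and an assumed label order (low, moderate, high); with a trained model the input row would come from something like `model.predict(padded_sequences)[0]`.

```python
LABELS = ["low", "moderate", "high"]  # assumed order of the three output units


def predicted_category(probabilities) -> str:
    """Return the label of the most probable class in one softmax output row."""
    if len(probabilities) != len(LABELS):
        raise ValueError("expected one probability per category")
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return LABELS[best]


print(predicted_category([0.05, 0.15, 0.80]))  # high
```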

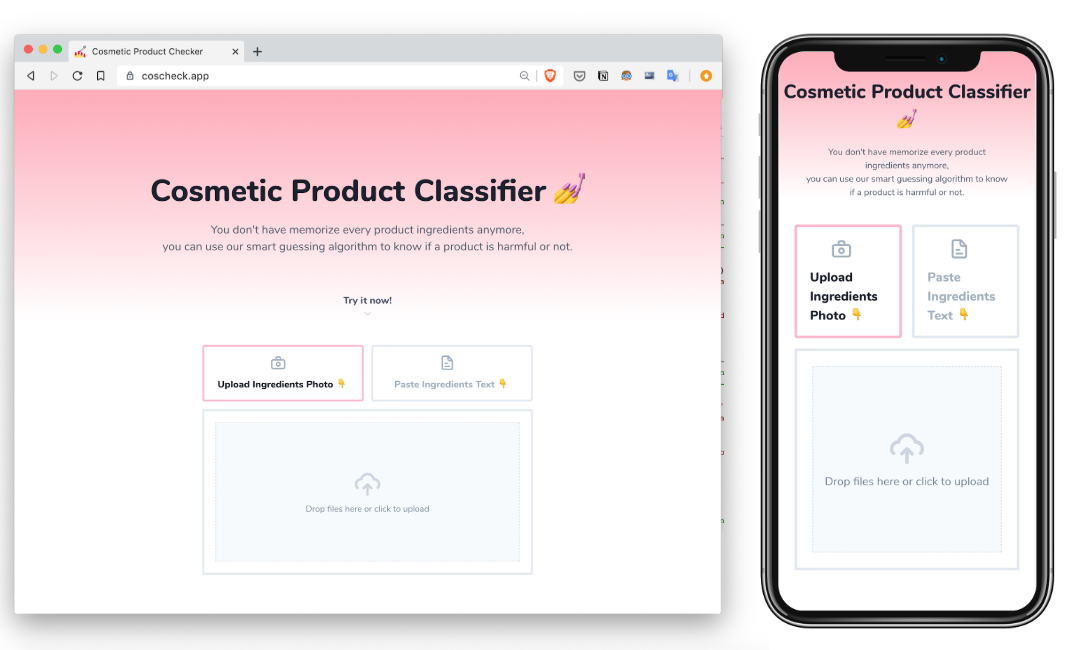

Rather than stop there, I wanted to make the classifier convenient for everyday use and available to everyone, so I built a web app where anyone can paste a product’s list of ingredients and see the category the model assigns to it.

Since typing out a list of ingredients in a store isn’t convenient, I added a feature that lets users upload a photo instead: the app automatically extracts the text from the image using an OCR library and feeds it to the model for prediction.

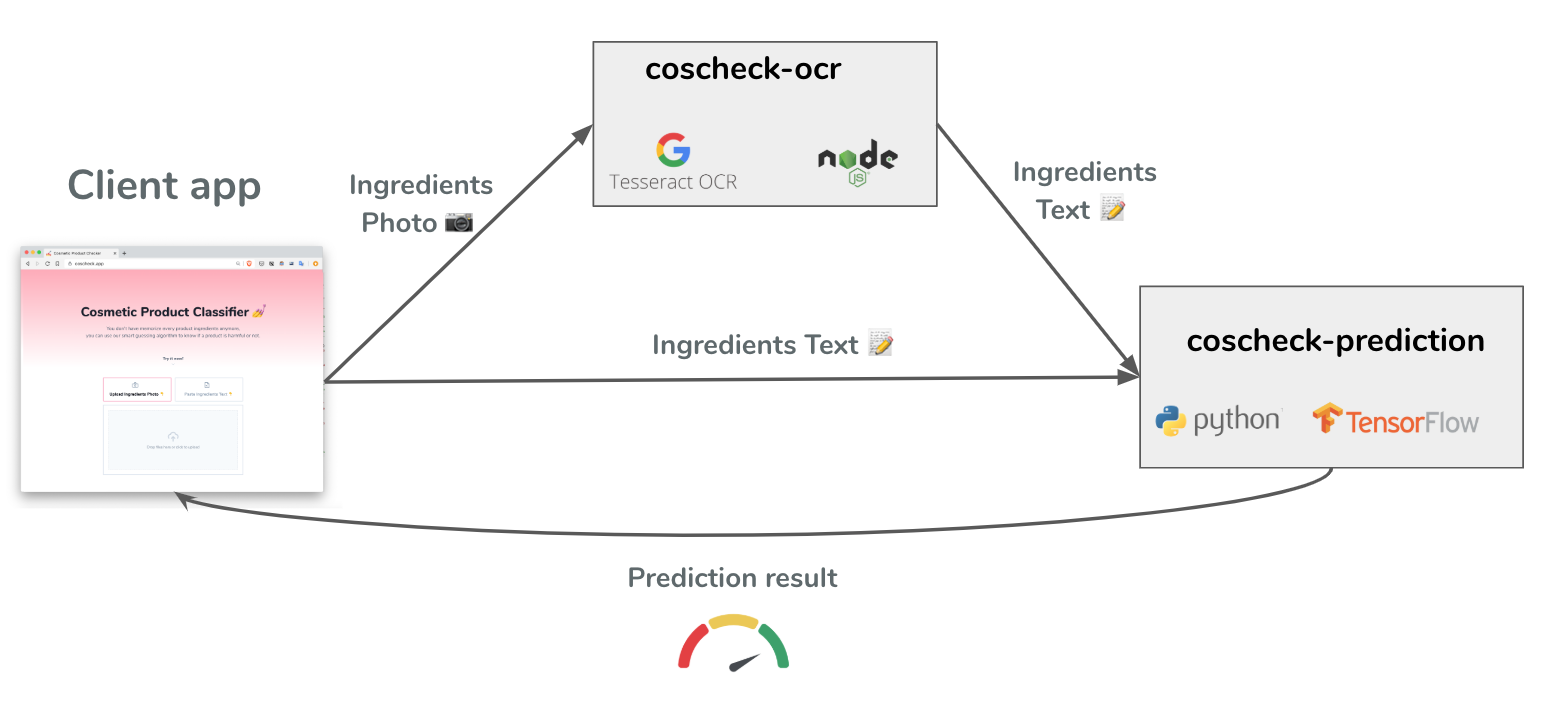

Here’s an overview of the architecture of the app and how its components fit together:

- web client: frontend application built with React.js, designed for both web and mobile.

- coscheck-ocr: backend server built with Node.js that performs OCR with Tesseract on the image sent in a POST request.

- coscheck-prediction: backend server built with Python and a TensorFlow client that runs the model prediction on the text sent in a POST request.
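The prediction service’s contract (text in via POST, category out) can be sketched with only Python’s standard library. This is not the project’s actual code: the JSON request field `ingredients`, the response field `category`, and the use of `http.server` are all assumptions, and `classify` is a hypothetical callable standing in for the real tokenize, pad and `model.predict` pipeline.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def handle_prediction(body: bytes, classify) -> tuple[int, bytes]:
    """Parse a JSON request body, classify its ingredient text, return (status, JSON bytes)."""
    try:
        text = json.loads(body)["ingredients"]  # hypothetical field name
    except (ValueError, KeyError, TypeError):
        text = None  # malformed JSON, missing field, or not a JSON object
    if not isinstance(text, str) or not text.strip():
        error = {"error": "expected a JSON object with a non-empty 'ingredients' string"}
        return 400, json.dumps(error).encode("utf-8")
    return 200, json.dumps({"category": classify(text)}).encode("utf-8")


def make_handler(classify):
    """Build a request handler class bound to a classify(text) -> label callable."""

    class PredictionHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            length = int(self.headers.get("Content-Length", 0))
            status, payload = handle_prediction(self.rfile.read(length), classify)
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(payload)))
            self.end_headers()
            self.wfile.write(payload)

    return PredictionHandler


def run(classify, port: int = 8000) -> None:
    """Serve predictions until interrupted (not called in this sketch)."""
    HTTPServer(("", port), make_handler(classify)).serve_forever()
```

Keeping the request parsing in `handle_prediction`, separate from the HTTP plumbing, makes it easy to test without starting a server; `run(classify)` would start listening on port 8000.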

Here’s a demo of the application in action:

On the left is an example using text as input; on the right is an example using a photo, from which the text is automatically extracted by the OCR library on the backend.

And for convenience, here’s a demo of the mobile web app:

Disclaimer: this is not a real product and should not be relied upon.

Feel free to experiment and play with it too at https://coscheck.app and reach out to me if you have any feedback. As for the code, it’s all on GitHub.

Fast forward to the day the hackathon winners were announced: I gave my presentation, my project was announced as a winner, and needless to say, I was very excited!

Lastly, we took a souvenir group photo and it was a very happy moment for us all!

This was an incredible journey for me, and it wouldn’t have been possible without the efforts of the Seattle Women in Data Science community. I want to thank all the organisers who worked tirelessly to make sure everything was a success, and Laurence Moroney from Google for the incredible opportunity he gave us to learn about TensorFlow. And last but not least, thanks to all the wonderful women who participated in this experience.